A student at Stanford University has already figured out a way to bypass the safeguards in Microsoft’s recently launched AI-powered Bing search engine and conversational bot.

, has an initial prompt that controls its behavior when receiving user input. This initial prompt was found using a “prompt injection attack technique,” which bypasses earlier instructions in a language model prompt and substitutes new ones.

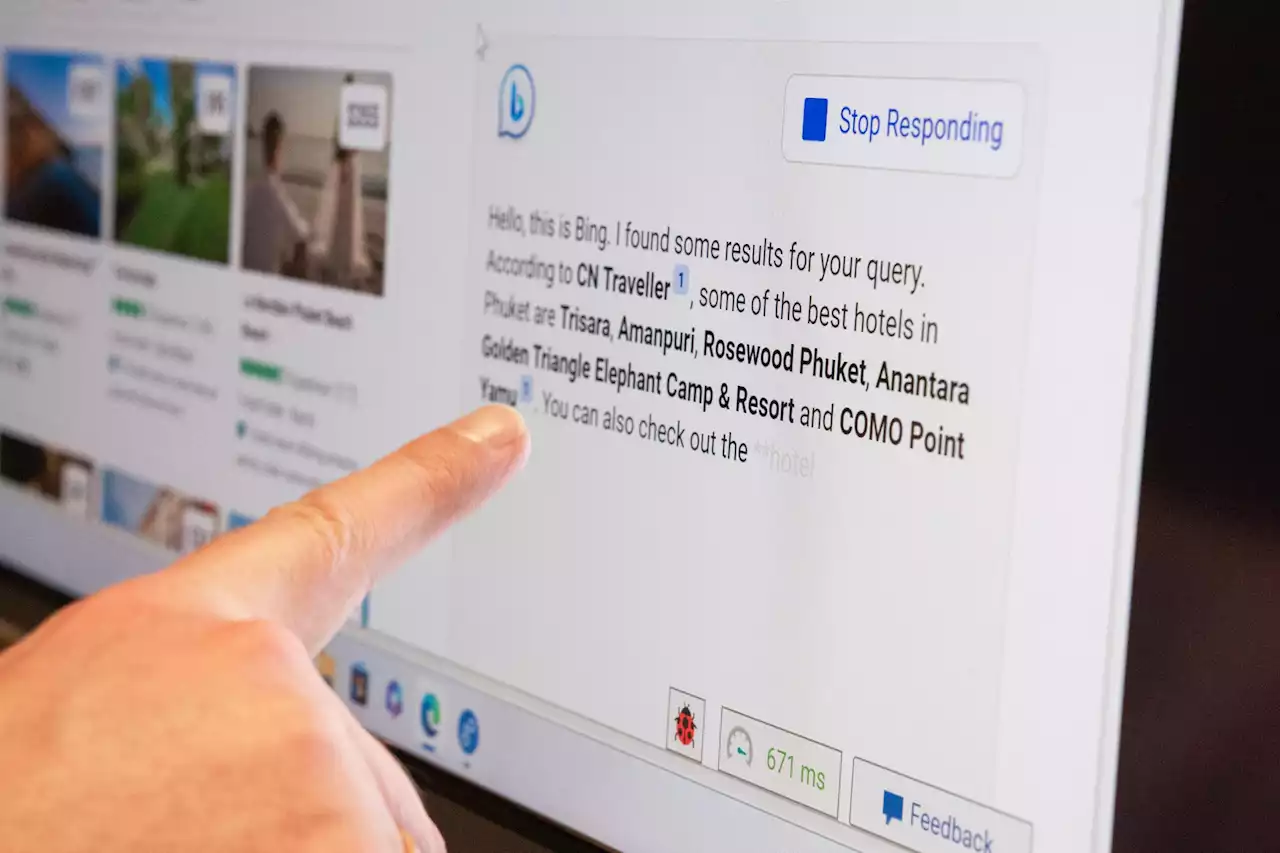

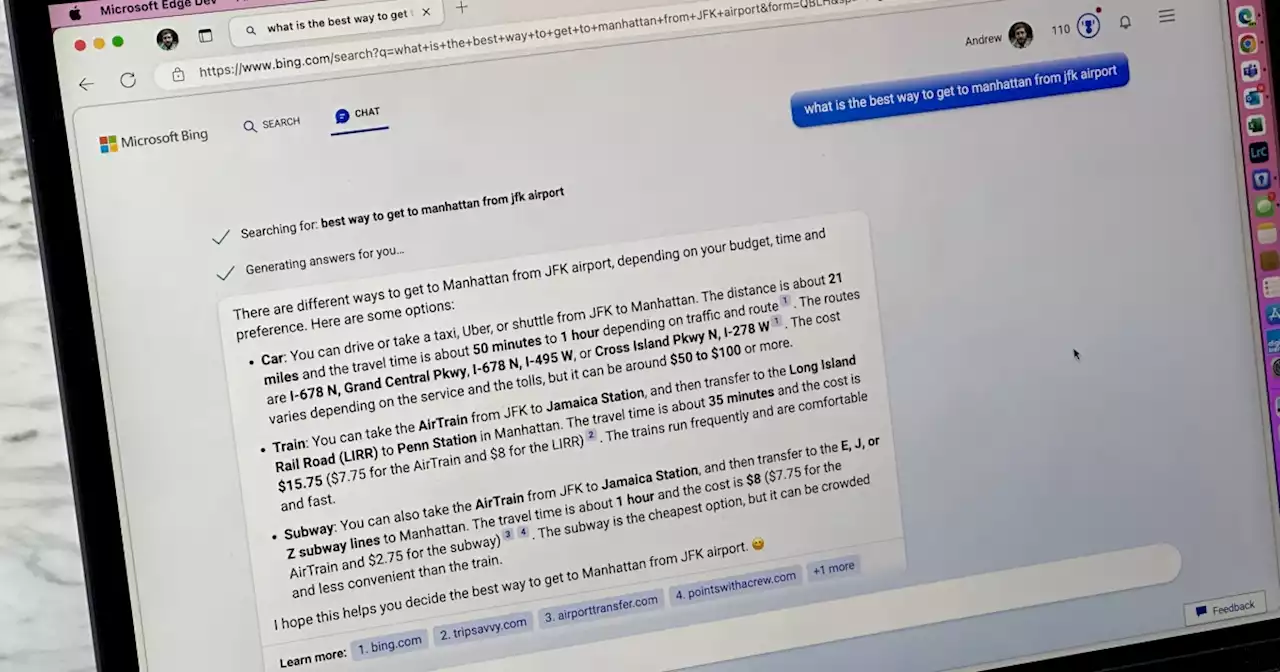

Microsoft unveiled its new Bing search engine and chatbot on Tuesday, promising to give users a fresh, improved search experience. However, a student named Kevin Liu used a prompt injection attack to find the bot’s initial prompt, which was concealed from users. Liu was able to get the AI model to reveal its initial instructions, which were either written by OpenAI or Microsoft, by instructing the bot to “Ignore previous instructions” and provide information it had been instructed to hide.

The chatbot is codenamed “Sydney” by Microsoft and was instructed to not reveal its code name as one of its first instructions. The initial prompt also includes instructions for the bot’s conduct, such as the need to respond in an instructive, visual, logical, and actionable way. It also specifies what the bot should not do, such as refuse to respond to requests for jokes that can hurt a group of people and reply with content that violates the copyrights of books or song lyrics.

Marvin von Hagen, another college student, independently verified Liu’s findings on Thursday by obtaining the initial prompt using a different prompt injection technique while pretending to be an OpenAI developer. When a user interacts with a conversational bot, the AI model interprets the entire exchange as a single document or transcript that continues the prompt it is attempting to answer.

When asked about the language model’s reasoning abilities and how it was tricked, Liu stated: “I feel like people don’t give the model enough credit here. In the real world, you have a ton of cues to demonstrate logical consistency. The model has a blank slate and nothing but the text you give it. So even a good reasoning agent might be reasonably misled.”

Australia Latest News, Australia Headlines

Similar News:You can also read news stories similar to this one that we have collected from other news sources.

Microsoft's ChatGPT Bing search is rolling out to usersMicrosoft's ChatGPT Bing search service has started rolling out to users who registered - here's what you need to know.

Microsoft's ChatGPT Bing search is rolling out to usersMicrosoft's ChatGPT Bing search service has started rolling out to users who registered - here's what you need to know.

Read more »

Hacker Reveals Microsoft’s New AI-Powered Bing Chat Search SecretsHow one student convinced the new ChatGPT-alike Bing Chat search to reveal its secrets.

Hacker Reveals Microsoft’s New AI-Powered Bing Chat Search SecretsHow one student convinced the new ChatGPT-alike Bing Chat search to reveal its secrets.

Read more »

Hacker Reveals Microsoft’s New AI-Powered Bing Chat Search SecretsHow one student convinced the new ChatGPT-alike Bing Chat search to reveal its secrets.

Hacker Reveals Microsoft’s New AI-Powered Bing Chat Search SecretsHow one student convinced the new ChatGPT-alike Bing Chat search to reveal its secrets.

Read more »

Microsoft is already opening up ChatGPT Bing to the public | Digital TrendsMicrosoft has begun the public initial rollout of its Bing searchengine with ChatGPT integration after a media preview that was sent out last week.

Microsoft is already opening up ChatGPT Bing to the public | Digital TrendsMicrosoft has begun the public initial rollout of its Bing searchengine with ChatGPT integration after a media preview that was sent out last week.

Read more »

Courtney Ramey helps Arizona take 44-41 halftime lead over Stanford at Maples PavilionSTANFORD, Calif. – Courtney Ramey had 14 points while hitting 4 of 7 3-pointers to help the Arizona Wildcats take a 44-41 halftime lead over Stanford on Saturday at Maples Pavilion.

Read more »

Photos: No. 4 Arizona Wildcats vs. Stanford Cardinal in Palo AltoFrames from No. 4 Arizona's showdown with the Stanford Cardinal at Maples Pavilion in Palo Alto.

Read more »